Promoting and Infringing Free Speech - Net Neutrality

Recently, more than 300 multinational companies stopped advertising on the world’s largest social media network, Facebook, in response to a call to protest the platform’s refusal to moderate hate speech.

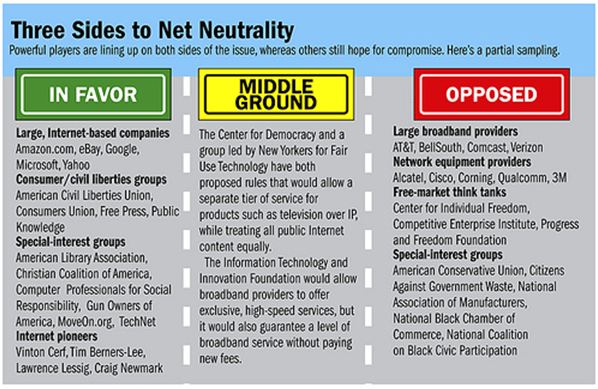

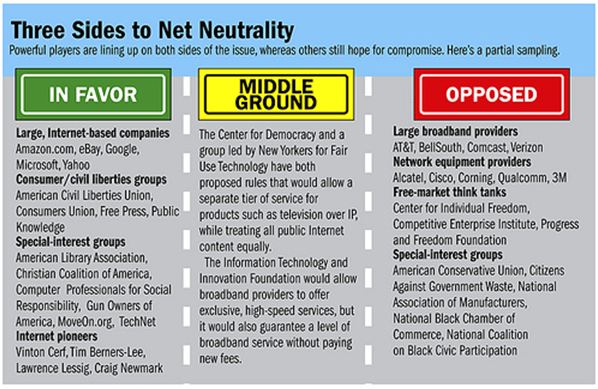

Facebook asserted that it is not ready to moderate hate speech, citing the principle of net neutrality. Under net neutrality, Internet Service Providers and social media corporations like Facebook are required not to intentionally block, slow down or charge money for specific online content.

However, it is alleged that hate speech on Facebook has helped fuel a genocide against the Rohingya Muslims in Myanmar. Similarly, in 2019, a gunman used the social network to livestream the mosque shootings in Christchurch, New Zealand.

These incidents have restarted the debate on net neutrality and on ways to prevent the propagation of violence and hatred through social media.

Net Neutrality

- Network neutrality is the principle that Internet service providers (ISPs) must treat all Internet communications equally, and not discriminate or charge differently based on user, content, website, platform, application, type of equipment, source address, destination address, or method of communication.

Paradoxical Utility of Social Media

- Social media is undoubtedly effective in galvanising democracy. Social and civil rights activists often use these platforms to draw attention to social issues and instances of injustice.

- However, social media also allows fringe sites and hate groups, including peddlers of conspiracy theories, to reach audiences far broader than their core readership.

- Facebook and similar platforms give malicious actors an effective tool to target audiences with extreme precision in order to spread hatred or incite targeted violence.

- Rumour Mongering: Fake narratives on online platforms have real-life consequences. For example, in India, rumours about child traffickers spread through the popular messaging platform WhatsApp recently led to a spate of lynchings in rural areas.

- Facilitating Polarisation: Social media enables communal actors to polarise people for electoral gain.

- For example, during the campaign for the recent Delhi Legislative Assembly elections, a leader incited crowds with communal and violent rhetoric on social media platforms.

- Soon after, a young man acted on these words by opening fire on protesters.

- This incident highlighted how the spread of hate speech through social media has real consequences.

- Social Media AI Poorly Adapted to Local Languages: The artificial intelligence based algorithms that social media platforms use to filter out hate speech are not adapted to local languages, and the companies have invested little in staff fluent in those languages.

- As a result, Facebook failed to curb ultranationalist Buddhist monks who used the platform to disseminate hate speech, which eventually led to the massacres of the Rohingya.

Way Forward

- Harmonising the Laws: The regulations to check misuse of social media are scattered across multiple acts and rules.

- Thus, there is a need to synchronise the relevant provisions of the Indian Penal Code, the Information Technology Act and the Code of Criminal Procedure.

- Also, the draft intermediary guidelines should be amended to tackle modern forms of hate content that proliferate on the Internet.

- Following the Supreme Court's Ruling: In Shreya Singhal v. Union of India (2015), the Supreme Court ruled on online speech and intermediary liability in India.

- It struck down Section 66A of the Information Technology Act, 2000, which restricted online speech, on the ground that it violated the freedom of speech guaranteed under Article 19(1)(a) of the Constitution of India.

- It also laid down how unlawful content should be taken down: an intermediary is obliged to remove content only after receiving a court order or a government notification, and the government should follow this due process when regulating hate content.

- Transparency obligation for digital platforms: Digital platforms could be required to publish the name of the author and the amount paid whenever content is sponsored.

- For example, with regard to fake news, France has an 1881 law that defines the criteria for establishing that news is fake and is being disseminated deliberately on a large scale.

- A legal injunction mechanism should be created to swiftly halt the dissemination of such news.

- Establishing a regulatory framework: An ideal regulatory framework would combine responsible broadcasting with institutional arrangements developed in consultation with social media platforms, media industry bodies, civil society and law enforcement.

- Global regulations could also be framed to set baseline standards for content, electoral integrity, privacy and data.

- Creating a Code of Conduct: A code of conduct can be framed without creating an ambiguous statutory structure that could open avenues for legislative and state control.

- For example, the European Union has established a code of conduct on countering illegal hate speech online under the framework of its ‘Digital Single Market’.
