The neuropsychology and physics hidden in the 2024 Physics Nobel Prize

- Owing to the ubiquity of neural networks, the 2024 Physics Nobel Prize has caught the attention of a large number of people across disciplines. However, many have been left wondering, ‘Where is the physics in this year’s Physics Prize?’ Even some physicists are clueless – and surprisingly, the citation does not spell it out!

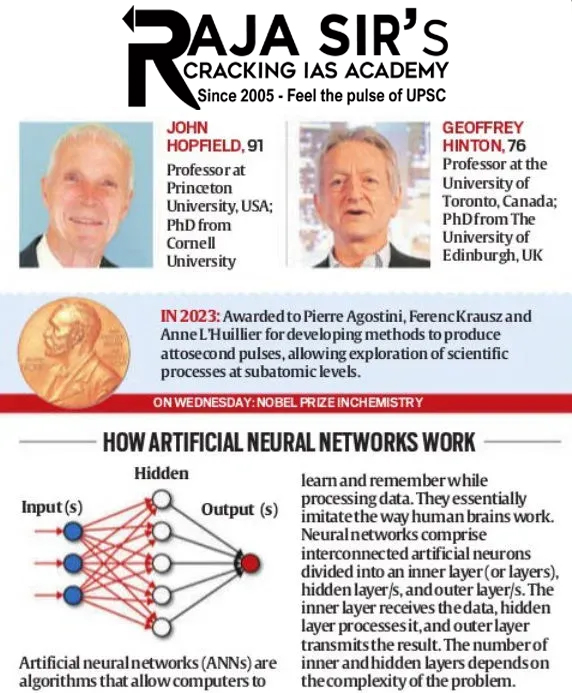

- Nor does the Nobel citation mention that, besides a fundamental piece of physics, a path-breaking discovery in neuropsychology lies at the core of John Hopfield’s Nobel-winning artificial neural network (ANN). Geoffrey Hinton (the co-recipient of the 2024 Prize) and several others built on this network to produce the wonders of Artificial Intelligence (AI). This year’s Physics Prize should interest cognitive neuroscientists as well!

- To see where the physics and the neuropsychology come in, we need to go back in time and recall how the subject evolved. The central idea was to make computers learn, memorise and recall associatively.

- In the 1930s and 1940s, the quest for a machine that could compute led mathematicians such as David Hilbert, Kurt Gödel, Alan Turing and Alonzo Church to investigate the subtleties of the computability of mathematical functions. Meanwhile, many scientists strove to understand how the brain computes.

- Advances in electronics allowed John von Neumann to lay out the architecture of the modern stored-program computer. Progress on the brain’s front was slower, because the physiology of learning and memory was not understood well enough until 1949. In that year the neuropsychologist Donald O. Hebb provided the vital clue.

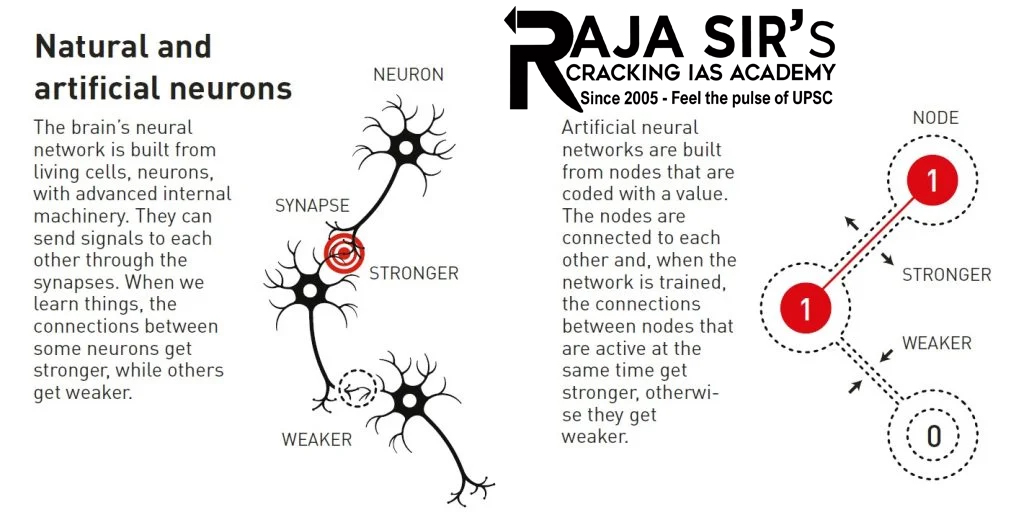

- Hebb proposed that when we learn something, the synapses connecting the brain’s fundamental constituents, namely the neurons, change their efficacy in a lasting way, much as plastic keeps a new shape. Specifically, a synapse grows stronger when the neuron on one side of it repeatedly helps to fire the neuron on the other side.

- This gave research a boost, but the turning point came only in 1982. In that year Hopfield, a physicist who had moved into biology, proposed a model of a neural network that looked biologically plausible. He built it by adapting the ideas and mathematical apparatus originally developed to understand a fascinating physical system called a ‘spin glass’.

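- A ‘spin glass’ is formed when magnetic elements, such as iron, manganese or chromium, are mixed in tiny proportions into non-magnetic elements such as gold, copper or silver.

- The magnetic atoms sit at random locations, few and far between in the host lattice of non-magnetic atoms, and at low temperature their ‘atomic spins’ freeze in random directions. The spins influence each other’s orientation in a ‘strange’ manner. Depending on their separation, some pairs favour parallel alignment and others antiparallel alignment. Each spin therefore receives conflicting commands about its orientation and is left ‘frustrated’. For example, on a triangle of three spins that each want to point opposite to both neighbours, at least one pair must be left unsatisfied. As a result, a spin glass has a very large number of patterns of randomly oriented spins that minimise its energy, at least locally.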

- Hopfield likened the ‘spins’ to neurons, which, like two-state spins, either fire or remain quiescent. The neurons interact by exchanging electrical impulses and, following Hebb’s principle, plastically modify the synapses that connect them.

- Hopfield mapped this neuronal interaction onto the interaction between the spins of a spin glass. The synaptic efficacies play the role of the couplings between spins, and the energy-minimising patterns of firing and quiescent neurons are identified as memories. Incoming information triggers frantic exchanges of electrical impulses between the neurons via the synapses, which in turn are modified plastically.

- Each piece of information, a pattern of firing and non-firing neurons, is saved in the form of changes in the synaptic efficacies. The network of synapses is modified substantially as patterns pile up as memories. A stored pattern can be retrieved from this pile ‘associatively’ by presenting it in its original form, or in a partial or distorted form.

- Hopfield’s ANN is thus a model brain in which memories are distributed over the entire network, and all of them share the same set of neurons.

- In later years, the field progressed in two directions. The overwhelming majority of researchers worked to improve ANN architectures for computer science applications. A few concentrated on removing the model’s biological and cognitive shortcomings to make it physiologically more realistic.
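The storage and associative recall described above can be sketched as a toy Hopfield network. This is a minimal illustration under stated assumptions, not Hopfield’s own code. It assumes the textbook form of the model:

- neurons take the values +1 (firing) or -1 (quiescent);
- a Hebb-style rule stores patterns as weights w_ij = (1/N) Σ x_i x_j;
- recall updates one neuron at a time to agree with its total input.

The energy function has the same form as a spin-glass energy, with the weights standing in for the couplings between spins. The function names and the two 8-neuron patterns are hypothetical.

```python
from typing import List

Pattern = List[int]  # one value per neuron: +1 = firing, -1 = quiescent


def hebbian_weights(patterns: List[Pattern]) -> List[List[float]]:
    """Hebb-style storage (assumed textbook rule): w_ij = (1/N) * sum over patterns of x_i * x_j."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no neuron is connected to itself
                    w[i][j] += p[i] * p[j] / n
    return w


def energy(w: List[List[float]], s: Pattern) -> float:
    """Spin-glass-style energy E = -1/2 * sum_ij w_ij s_i s_j; stored patterns tend to sit at its minima."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))


def recall(w: List[List[float]], probe: Pattern, max_sweeps: int = 10) -> Pattern:
    """Associative recall: update neurons one at a time until the state stops changing."""
    s = list(probe)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(w[i][j] * s[j] for j in range(n))  # total input from the other neurons
            new_state = 1 if field > 0 else -1 if field < 0 else s[i]  # zero input: keep state
            if new_state != s[i]:
                s[i] = new_state
                changed = True
        if not changed:  # stable pattern reached, i.e. a local minimum of the energy
            break
    return s


# Two hypothetical 8-neuron memories stored in the same set of weights.
p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
w = hebbian_weights([p1, p2])

noisy = [-1, -1, 1, 1, -1, -1, -1, -1]  # p1 with its first two neurons flipped
print(recall(w, noisy) == p1)  # True
```

Both memories live in the same weight matrix `w`, echoing the point that all memories share the same neurons. Recall moves the network downhill in energy, from 0 for the distorted probe to -3 for the stored pattern. With only two mutually orthogonal patterns in eight neurons, this toy works cleanly. A real Hopfield network can reliably store only a limited number of patterns relative to its size before recall starts to fail.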

ANNs

The 2024 Nobel Prize in Physics was awarded jointly to John Hopfield and Geoffrey Hinton for developing methods that helped lay the foundation for machine learning (a branch of AI) with ANNs.

- ANNs are a class of machine learning models loosely inspired by the workings of the human brain.

- ANNs consist of interconnected nodes, or artificial neurons, that process information in a way loosely modelled on how neurons function in the human brain.

- John Hopfield: He invented the Hopfield network, a type of neural network designed to store patterns and recall them, much as associative memory works.

- The Hopfield network uses the physics that describes a material’s properties arising from its atomic spins.

- Atomic spin is the property that makes each atom behave like a tiny magnet. It arises from the spins of the particles, chiefly electrons, that make up the atom.

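- Geoffrey Hinton: He co-invented, with Terrence Sejnowski, a method (the Boltzmann machine) that can autonomously find properties in data, e.g. identifying specific elements in pictures.

- A Boltzmann machine is trained by feeding it examples. Its connections are adjusted so that patterns like those examples become highly probable when the machine runs. Once trained, it can classify images or create new patterns similar to those it learned.

- This network uses methods from statistical physics. The probability of each state of the network is given by the Boltzmann distribution, which gives the machine its name.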
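The statistical-physics ingredient can be illustrated with a toy example. The Python sketch below assumes the standard textbook Boltzmann machine: binary 0/1 units, symmetric couplings `W` and biases `B`. The parameter values are hypothetical, and no learning is performed. The sketch does two things:

- It computes the Boltzmann distribution P(s) ∝ exp(−E(s)/T) exactly for three units.
- It compares that distribution with the frequencies produced by Gibbs sampling. In Gibbs sampling, each unit switches on with a probability given by the logistic function of its energy gap.

```python
import math
import random
from itertools import product

# Hypothetical toy parameters for a 3-unit Boltzmann machine (not learned from data).
W = [[0.0, 1.0, -2.0],
     [1.0, 0.0, 0.5],
     [-2.0, 0.5, 0.0]]  # symmetric couplings, zero diagonal
B = [0.1, -0.3, 0.2]    # biases
T = 1.0                 # "temperature"


def energy(s):
    """E(s) = -sum_{i<j} w_ij s_i s_j - sum_i b_i s_i for binary units s_i in {0, 1}."""
    n = len(s)
    pair = sum(W[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    return -pair - sum(b * x for b, x in zip(B, s))


def boltzmann_distribution():
    """Exact P(s) = exp(-E(s)/T) / Z, enumerating all 2^n states (feasible only for tiny n)."""
    states = list(product([0, 1], repeat=len(B)))
    boltzmann_factors = [math.exp(-energy(s) / T) for s in states]
    z = sum(boltzmann_factors)  # partition function
    return {s: f / z for s, f in zip(states, boltzmann_factors)}


def gibbs_sample(n_sweeps, seed=0):
    """Update units in turn; unit i turns on with probability sigmoid(energy gap / T)."""
    rng = random.Random(seed)
    s = [0] * len(B)
    counts = {}
    for _ in range(n_sweeps):
        for i in range(len(s)):
            gap = sum(W[i][j] * s[j] for j in range(len(s))) + B[i]  # E(s_i=0) - E(s_i=1)
            s[i] = 1 if rng.random() < 1.0 / (1.0 + math.exp(-gap / T)) else 0
        counts[tuple(s)] = counts.get(tuple(s), 0) + 1  # record the state after each sweep
    return {state: c / n_sweeps for state, c in counts.items()}


exact = boltzmann_distribution()
sampled = gibbs_sample(100_000)
for state in sorted(exact):
    print(state, round(exact[state], 3), round(sampled.get(state, 0.0), 3))
```

The two printed columns should agree closely, because the sampler's local, logistic update rule is exactly what the global Boltzmann distribution implies for a single unit. Training is omitted here. Training would mean adjusting `W` and `B` so that the example patterns become probable. Exact enumeration of all states is only practical for very small networks.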
